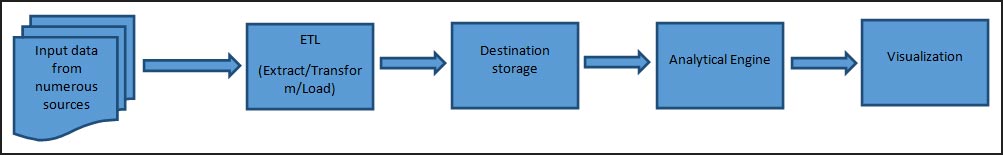

Big Data is a buzzword these days, and every industry, from small businesses to large enterprises, is looking at it to solve its problems and derive hidden insights from its data. More sophisticated algorithms, platforms, and advanced tools from numerous vendors are being released into the market, and everyone is trying to solve problems in a different way. The complete picture of solving a Big Data problem goes through the phases below.

- Input Sources: log data, streaming real-time data, sensor data, existing databases (MySQL, Oracle, etc.)

- ETL Tools: Oracle BI tools, Talend, Pentaho, Clover, etc.

- Destination Storage: HDFS, HBase, Cassandra, DynamoDB, AWS S3, Greenplum, MongoDB, etc.

- Analytical Engine: R engine, machine learning engines, etc.

- Visualization: Tableau, Google Charts, Leaflet, Highcharts, etc.

As the picture above shows, data has to be moved from the sources to the destination storage, from storage to the analytical engines, and from the engines to the visualization tools.

The desired platform today is one that performs all of the above steps in a single place, so that data does not have to be moved between different locations. This saves valuable resources such as network bandwidth and time, and it reduces latency. Databricks is a product that has recently emerged to solve this problem.
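To make the single-platform idea concrete, here is a minimal Scala sketch in which one Spark job covers ingestion, ETL, analytics, and hand-off of the result, without the data leaving the Spark engine between phases. It assumes Spark 3.x with the `spark-sql` artifact on the classpath (for example via sbt). The log-line format and the names `SinglePlatformPipeline` and `errorCountsByService` are hypothetical, and the in-memory `Seq` only stands in for a real source such as log files or a stream. It uses plain Apache Spark, not any Databricks-specific API.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, regexp_extract}

object SinglePlatformPipeline {
  // Hypothetical log format: "<LEVEL> <service> <message>"
  private val LinePattern = """^(\w+)\s+(\S+)\s+(.*)$"""

  def errorCountsByService(spark: SparkSession, lines: Seq[String]): Map[String, Long] = {
    import spark.implicits._

    // 1. Input source: raw log lines (an in-memory stand-in for files or a stream)
    val raw = lines.toDF("line")

    // 2. ETL: parse each line into named columns
    val parsed = raw.select(
      regexp_extract(col("line"), LinePattern, 1).as("level"),
      regexp_extract(col("line"), LinePattern, 2).as("service"),
      regexp_extract(col("line"), LinePattern, 3).as("message"))

    // 3. Analytics: count ERROR lines per service
    val counts = parsed
      .filter(col("level") === "ERROR")
      .groupBy("service")
      .count()

    // 4. Hand the small aggregated result to a reporting/visualization layer
    counts.collect().map(row => row.getString(0) -> row.getLong(1)).toMap
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("single-platform-pipeline")
      .master("local[*]") // runs in-process; no cluster required
      .getOrCreate()
    val logs = Seq(
      "INFO  web  request served",
      "ERROR web  timeout talking to db",
      "ERROR db   disk full",
      "ERROR web  timeout talking to db")
    println(errorCountsByService(spark, logs))
    spark.stop()
  }
}
```

In a real deployment the input would come from storage such as HDFS or S3 and the result would feed a dashboard; the point of the sketch is only that each phase is a transformation on the same DataFrame rather than a copy between separate systems.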

Features of the Databricks product:

- Interactively analyze, visualize, and curate your data – big or small. Leverage Python, SQL, or Scala and seamlessly weave together advanced analytics, streaming data and interactive queries. Collaborate with colleagues in real-time to discover insights.

- Build live dashboards that capture insights from your data exploration. Allow colleagues to interact with and customize visualizations. Automatically update dashboards and underlying queries in response to changing data.

- Scale the insights you’ve discovered by building data products that deliver value to your business and customers. Schedule execution of notebooks you’ve built or standard Spark jobs – either periodically or based on changes in the underlying data.

- Dynamically provision and scale managed clusters. Seamlessly import data – from S3, local uploads, and a variety of other sources. Fault tolerance, security, and resource isolation provided out of the box. Advanced management and monitoring tools to understand usage.

Databricks is powered by the 100% open source Apache Spark – the most active project in the Big Data ecosystem. Spark lets you explore your data in SQL, Python, Java, or Scala, and integrates machine learning, graph computation, streaming analytics, and interactive queries. The Databricks platform is the best place to develop and deploy Spark applications. Databricks is 100% compatible with the Apache Spark API. This allows users to leverage applications from the rapidly growing Spark ecosystem, and developers to easily develop Spark applications and deploy them on any Certified Spark distribution – in the cloud or on-premises.
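As a sketch of what exploring the same data in either SQL or Scala looks like, the example below runs one aggregation twice on a single DataFrame: once through the Scala DataFrame API and once as a SQL query against a temporary view. It assumes Spark 3.x with `spark-sql` on the classpath; the sample temperature readings and the names `SqlAndScalaOnSameData`, `viaDataFrameApi`, and `viaSql` are made up for illustration.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{avg, col}

object SqlAndScalaOnSameData {
  // Hypothetical sample data: (city, temperature reading)
  def readings(spark: SparkSession): DataFrame = {
    import spark.implicits._
    Seq(("Austin", 30.0), ("Austin", 34.0), ("Oslo", 4.0), ("Oslo", 6.0))
      .toDF("city", "temp")
  }

  // Average temperature per city using the Scala DataFrame API
  def viaDataFrameApi(df: DataFrame): Map[String, Double] =
    df.groupBy("city")
      .agg(avg(col("temp")).as("avg_temp"))
      .collect()
      .map(row => row.getString(0) -> row.getDouble(1))
      .toMap

  // The same query expressed in SQL against a temporary view
  def viaSql(spark: SparkSession, df: DataFrame): Map[String, Double] = {
    df.createOrReplaceTempView("readings")
    spark.sql("SELECT city, AVG(temp) AS avg_temp FROM readings GROUP BY city")
      .collect()
      .map(row => row.getString(0) -> row.getDouble(1))
      .toMap
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("sql-and-scala")
      .master("local[*]")
      .getOrCreate()
    val df = readings(spark)
    val fromApi = viaDataFrameApi(df)
    val fromSql = viaSql(spark, df)
    println(s"DataFrame API: $fromApi")
    println(s"SQL:           $fromSql")
    assert(fromApi == fromSql) // same data, same engine, same answer
    spark.stop()
  }
}
```

Both forms are optimized by the same Spark SQL engine, so the choice between them is largely a matter of which reads better for a given query.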

The survey results indicate that 13% of respondents are already using Spark in production environments, 20% plan to deploy Spark in production in 2015, and 31% are currently evaluating it. In total, the survey covers over 500 enterprises that are using or planning to use Spark in production environments ranging from on-premises Hadoop clusters to public clouds, with data sources including key-value stores, relational databases, streaming data, and file systems. Applications range from batch workloads to SQL queries, stream processing, and machine learning, highlighting Spark’s unique capability as a simple, unified platform for data processing.

So there is a lot of scope for the Spark and Scala combination, and it is worth everyone getting their hands dirty in a Spark environment to prepare for the future.